Announcing AudioSet: A Dataset for Audio Event Research

March 30, 2017

Posted by Dan Ellis, Research Scientist, Sound Understanding Team

Systems able to recognize sounds familiar to human listeners have a wide range of applications, from adding sound effect information to automatic video captions to potentially allowing you to search videos for specific audio events. Building deep learning systems to do this relies heavily on large amounts of computation (often from highly parallel GPUs) and, perhaps more importantly, on significant amounts of accurately labeled training data. However, research in environmental sound recognition is limited by currently available public datasets.

To address this, we recently released AudioSet, a collection of over 2 million ten-second YouTube excerpts labeled with a vocabulary of 527 sound event categories, with at least 100 examples for each category. Announced in our paper at the IEEE International Conference on Acoustics, Speech, and Signal Processing, AudioSet provides a common, realistic-scale evaluation task for audio event detection and a starting point for a comprehensive vocabulary of sound events, designed to advance research into audio event detection and recognition.

Developing an Ontology

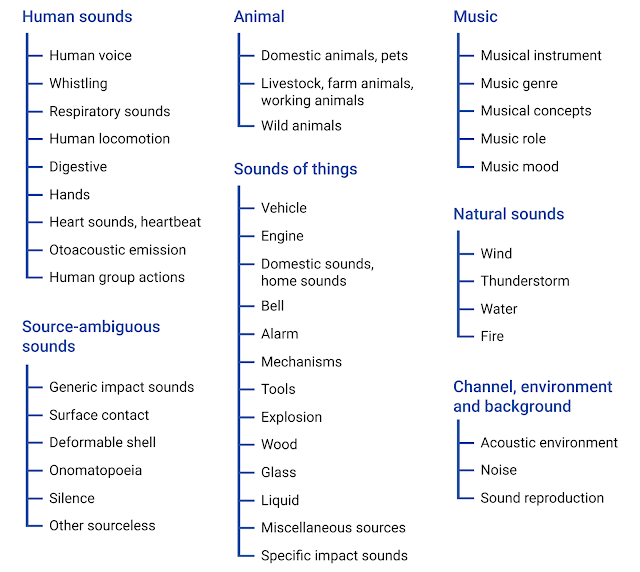

When we started on this work last year, our first task was to define a vocabulary of sound classes that provided a consistent level of detail over the spectrum of sound events we planned to label. Defining this ontology was necessary to avoid problems of ambiguity and synonyms; without this, we might end up trying to differentiate “Timpani” from “Kettle drum”, or “Water tap” from “Faucet”. Although a number of scientists have looked at how humans organize sound events, the few existing ontologies proposed have been small and partial. To build our own, we searched the web for phrases like “Sounds, such as X and Y”, or “X, Y, and other sounds”. This gave us a list of sound-related words which we manually sorted into a hierarchy of over 600 sound event classes ranging from “Child speech” to “Ukulele” to “Boing”. To make our taxonomy as comprehensive as possible, we then looked at comparable lists of sound events (for instance, the Urban Sound Taxonomy) to add significant classes we may have missed and to merge classes that weren't well defined or well distinguished. You can explore our ontology here.

Figure: The top two levels of the AudioSet ontology.
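The hierarchy described above can be pictured as a list of nodes, each naming its children. The following is a minimal sketch of listing the top two levels of such a hierarchy. It assumes each node carries `id`, `name`, and `child_ids` fields; the toy entries and their ids are made up for this example and are not taken from the released ontology.

```python
from typing import Dict, List

# Toy stand-in for the ontology. The field names ("id", "name", "child_ids")
# and every id below are assumptions for illustration only.
TOY_ONTOLOGY: List[Dict] = [
    {"id": "root/human", "name": "Human sounds", "child_ids": ["human/voice"]},
    {"id": "human/voice", "name": "Human voice", "child_ids": ["voice/speech"]},
    {"id": "voice/speech", "name": "Speech", "child_ids": []},
    {"id": "root/music", "name": "Music", "child_ids": ["music/instrument"]},
    {"id": "music/instrument", "name": "Musical instrument", "child_ids": []},
]


def top_levels(ontology: List[Dict], depth: int = 2) -> List[str]:
    """Return indented class names for the first `depth` levels of the hierarchy."""
    by_id = {node["id"]: node for node in ontology}
    # A root is any node that never appears as another node's child.
    all_children = {cid for node in ontology for cid in node["child_ids"]}
    roots = [node for node in ontology if node["id"] not in all_children]

    lines: List[str] = []

    def walk(node: Dict, level: int) -> None:
        if level >= depth:
            return
        lines.append("  " * level + node["name"])
        for cid in node["child_ids"]:
            walk(by_id[cid], level + 1)

    for root in roots:
        walk(root, 0)
    return lines


if __name__ == "__main__":
    print("\n".join(top_levels(TOY_ONTOLOGY)))
```

With a real ontology file, the same function would apply once the file is loaded into a list of dictionaries, provided the field names match the assumptions above.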

With our new ontology in hand, we were able to begin collecting human judgments of where the sound events occur. This, too, raises subtle problems: unlike the billions of well-composed photographs available online, people don’t typically produce “well-framed” sound recordings, much less provide them with captions. We decided to use 10-second sound snippets as our unit; anything shorter becomes very difficult to identify in isolation. We collected candidate snippets for each of our classes by taking random excerpts from YouTube videos whose metadata indicated they might contain the sound in question (“Dogs Barking for 10 Hours”). Each snippet was presented to a human labeler with a small set of category names to be confirmed (“Do you hear a Bark?”). Subsequently, we proposed snippets whose content was similar to examples that had already been manually verified to contain the class, thereby finding examples that were not discoverable from the metadata. Because some classes were much harder to find than others, particularly onomatopoeic words like “Squish” and “Clink”, we adapted our segment proposal process to increase the sampling for those categories. For more details, see our paper on the matching technique.

AudioSet provides the URLs of each video excerpt along with the sound classes that the raters confirmed as present, as well as precalculated audio features from the same classifier used to generate audio features for the updated YouTube 8M Dataset. Below is a histogram of the number of examples per class:

Figure: The total number of videos for selected classes in AudioSet.

Figure: A few of the segments representing the class “Violin, fiddle”.
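Since the release pairs each excerpt with its confirmed sound classes, per-class counts like those in the histogram can be tallied directly from the segment list. The sketch below assumes a CSV layout of video id, start time, end time, and a quoted comma-separated list of class ids; the sample rows and ids are hypothetical and only illustrate the idea.

```python
import csv
import io
from collections import Counter

# Hypothetical sample in the assumed layout:
# video id, start (s), end (s), quoted list of class ids.
SAMPLE_CSV = '''# YTID, start_seconds, end_seconds, positive_labels
vid_aaaaaaa, 30.000, 40.000, "/m/bark,/m/dog"
vid_bbbbbbb, 0.000, 10.000, "/m/violin"
vid_ccccccc, 120.000, 130.000, "/m/bark"
'''


def count_examples_per_class(csv_text: str) -> Counter:
    """Count how many excerpts carry each class id."""
    # Drop blank lines and '#' comment lines before handing rows to csv.reader.
    data_lines = (
        line
        for line in io.StringIO(csv_text)
        if line.strip() and not line.startswith("#")
    )
    counts: Counter = Counter()
    # skipinitialspace lets the quoted label field follow ", " correctly.
    for _video_id, _start, _end, labels in csv.reader(data_lines, skipinitialspace=True):
        counts.update(label.strip() for label in labels.split(","))
    return counts


if __name__ == "__main__":
    for class_id, n in count_examples_per_class(SAMPLE_CSV).most_common():
        print(f"{class_id}\t{n}")
```

Mapping class ids back to readable names would need the ontology; that lookup is left out here to keep the sketch short.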

Once we had a substantial set of human ratings, we conducted an internal Quality Assessment task where, for most of the classes, we checked 10 examples of excerpts that the annotators had labeled with that class. This revealed a significant number of classes with inaccurate labeling: some, like “Dribble” (uneven water flow) and “Roll” (a hard object moving by rotating), had been systematically confused (as basketball dribbling and drum rolls, respectively); others, such as “Patter” (footsteps of small animals) and “Sidetone” (background sound on a telephony channel), were too difficult to label and/or find examples for, even with our content-based matching. We also looked at the behavior of a classifier trained on the entire dataset and found a number of frequent confusions indicating separate classes that were not really distinct, such as “Jingle” and “Tinkle”.

To address these “problem” classes, we developed a re-rating process by labeling groups of classes that span (and thus highlight) common confusions, and created instructions for the labeler to be used with these sounds. This re-rating has led to multiple improvements – merged classes, expanded coverage, better descriptions – that we were able to incorporate in this release. This iterative process of labeling and assessment has been particularly effective in shaking out weaknesses in the ontology.
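The classifier-based check mentioned above, looking for frequent confusions between classes, can be illustrated with a small tally. This is only a sketch of one simple way to surface such pairs, not a description of the analysis we ran: it assumes one true and one predicted label per example, and the function name, threshold, and toy data are all made up for illustration.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def frequent_confusions(
    pairs: Iterable[Tuple[str, str]], min_count: int = 2
) -> List[Tuple[Tuple[str, str], int]]:
    """Return unordered class pairs that are mistaken for each other often.

    `pairs` holds (true_label, predicted_label) tuples. Confusions in both
    directions (A predicted as B, B predicted as A) are pooled together.
    """
    confusions: Counter = Counter()
    for true_label, predicted_label in pairs:
        if true_label != predicted_label:
            confusions[frozenset((true_label, predicted_label))] += 1
    return [
        (tuple(sorted(pair)), n)
        for pair, n in confusions.most_common()
        if n >= min_count
    ]


if __name__ == "__main__":
    # Toy predictions, invented for this example.
    toy_pairs = [
        ("Jingle", "Tinkle"),
        ("Tinkle", "Jingle"),
        ("Jingle", "Jingle"),
        ("Tinkle", "Jingle"),
        ("Bark", "Meow"),
        ("Bark", "Bark"),
    ]
    print(frequent_confusions(toy_pairs))
```

Pairs that rise to the top of such a list are candidates for merging or for clearer labeling instructions.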

A Community Dataset

By releasing AudioSet, we hope to provide a common, realistic-scale evaluation task for audio event detection, as well as a starting point for a comprehensive vocabulary of sound events. We would like to see a vibrant sound event research community develop, including through external efforts such as the DCASE challenge series. We will continue to improve the size, coverage, and accuracy of this data and plan to make a second release in the coming months when our re-rating process is complete. We additionally encourage the research community to continue to refine our ontology, which we have open sourced on GitHub. We believe that with this common focus, sound event recognition will continue to advance and will allow machines to understand sounds in a way similar to how we do, enabling new and exciting applications.

Acknowledgments:

AudioSet is the work of Jort F. Gemmeke, Dan Ellis, Dylan Freedman, Shawn Hershey, Aren Jansen, Wade Lawrence, Channing Moore, Manoj Plakal, and Marvin Ritter, with contributions from Sami Abu-El-Haija, Sourish Chaudhuri, Victor Gomes, Nisarg Kothari, Dick Lyon, Sobhan Naderi Parizi, Paul Natsev, Brian Patton, Rif A. Saurous, Malcolm Slaney, Ron Weiss, and Kevin Wilson.