An Augmented Reality Microscope for Cancer Detection

April 16, 2018

Posted by Martin Stumpe, Technical Lead and Craig Mermel, Product Manager, Google Brain Team

(Updated Aug 12, 2019: The work described in this blogpost has been published in Nature Medicine.)

Applications of deep learning to medical disciplines including ophthalmology, dermatology, radiology, and pathology have recently shown great promise to increase both the accuracy and availability of high-quality healthcare to patients around the world. At Google, we have also published results showing that a convolutional neural network is able to detect breast cancer metastases in lymph nodes at a level of accuracy comparable to a trained pathologist. However, because direct tissue visualization using a compound light microscope remains the predominant means by which a pathologist diagnoses illness, a critical barrier to the widespread adoption of deep learning in pathology is the dependence on having a digital representation of the microscopic tissue.

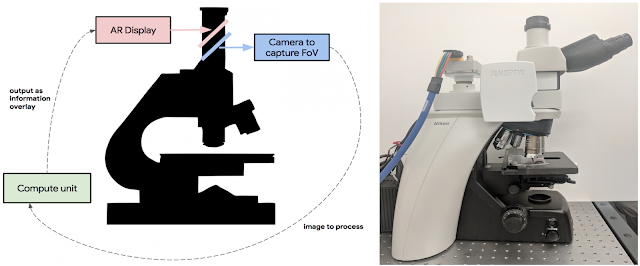

Today, in a talk delivered at the Annual Meeting of the American Association for Cancer Research (AACR), with an accompanying paper “An Augmented Reality Microscope for Real-time Automated Detection of Cancer”, we describe a prototype Augmented Reality Microscope (ARM) platform that we believe can help accelerate and democratize the adoption of deep learning tools for pathologists around the world. The platform consists of a modified light microscope that enables real-time image analysis and presentation of the results of machine learning algorithms directly into the field of view. Importantly, the ARM can be retrofitted into existing light microscopes found in hospitals and clinics around the world using low-cost, readily available components, and without the need for whole slide digital versions of the tissue being analyzed.

Modern computational components and deep learning models, such as those built upon TensorFlow, will allow a wide range of pre-trained models to run on this platform. As in a traditional analog microscope, the user views the sample through the eyepiece. A machine learning algorithm projects its output back into the optical path in real-time. This digital projection is visually superimposed on the original (analog) image of the specimen to assist the viewer in localizing or quantifying features of interest. Importantly, the computation and visual feedback update quickly: our present implementation runs at approximately 10 frames per second, so the model output updates seamlessly as the user scans the tissue by moving the slide and/or changing magnification.

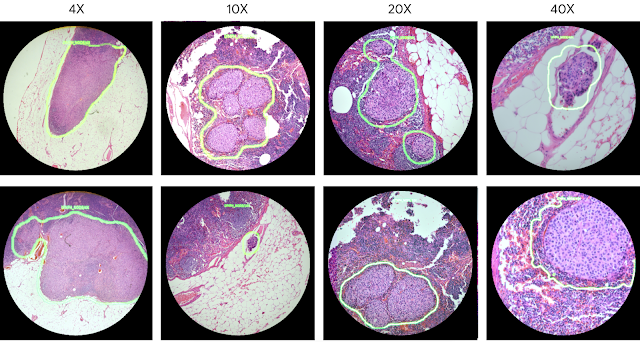
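To make the real-time loop described above concrete, here is a minimal sketch of a capture, inference, and overlay cycle with a per-frame time budget derived from the roughly 10 frames per second quoted in this post. The function names (`capture_frame`, `run_model`, `process_frames`), the frame size, and the 0.5 threshold are hypothetical stand-ins chosen for illustration; they are not the ARM's actual software.

```python
import time

import numpy as np

TARGET_FPS = 10                      # approximate rate quoted in the post
FRAME_BUDGET_S = 1.0 / TARGET_FPS    # about 0.1 s available per frame


def capture_frame(rng, shape=(64, 64)):
    """Hypothetical camera stand-in: returns a random grayscale frame."""
    return rng.random(shape, dtype=np.float32)


def run_model(frame):
    """Hypothetical model stand-in: returns a per-pixel score in [0, 1].

    A real system would call a trained network here; this placeholder
    simply passes the frame through.
    """
    return frame


def process_frames(n_frames, seed=0):
    """Run the capture -> inference -> overlay cycle n_frames times.

    Returns the boolean overlays and the measured per-frame latencies,
    which can be compared against FRAME_BUDGET_S.
    """
    rng = np.random.default_rng(seed)
    overlays, latencies = [], []
    for _ in range(n_frames):
        start = time.perf_counter()
        frame = capture_frame(rng)
        scores = run_model(frame)
        overlays.append(scores > 0.5)   # assumed threshold, for illustration
        latencies.append(time.perf_counter() - start)
    return overlays, latencies


overlays, latencies = process_frames(3)
print(f"frame budget: {FRAME_BUDGET_S:.3f} s, slowest frame: {max(latencies):.6f} s")
```

In a sketch like this, keeping the slowest frame under the budget is what lets the overlay keep pace as the slide moves.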

As a demonstration of the potential utility of the ARM, we configured it to run two different cancer detection algorithms: one that detects breast cancer metastases in lymph node specimens, and another that detects prostate cancer in prostatectomy specimens. These models can run at magnifications between 4x and 40x, and the result of a given model is displayed by outlining detected tumor regions with a green contour. These contours help draw the pathologist’s attention to areas of interest without obscuring the underlying tumor cell appearance.

Example view through the lens of the ARM. These images show examples of the lymph node metastasis model with 4x, 10x, 20x, and 40x microscope objectives.
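The contour-style display described above can be sketched as follows: threshold a per-pixel tumor probability map, keep only the boundary pixels of the resulting region, and paint those green so the interior stays visible. This is an illustrative approach using NumPy only; the function names, the 0.5 threshold, and the one-pixel outline are assumptions, not the method used in the ARM.

```python
import numpy as np


def outline_mask(mask):
    """Return the boundary pixels of a boolean mask.

    A pixel is interior if it and its four direct neighbours are all set;
    the boundary is the mask minus its interior.
    """
    padded = np.pad(mask, 1, constant_values=False)
    interior = (
        padded[1:-1, 1:-1]
        & padded[:-2, 1:-1]   # neighbour above
        & padded[2:, 1:-1]    # neighbour below
        & padded[1:-1, :-2]   # neighbour to the left
        & padded[1:-1, 2:]    # neighbour to the right
    )
    return mask & ~interior


def draw_green_contour(image_rgb, prob_map, threshold=0.5):
    """Outline regions where prob_map >= threshold in green.

    Only boundary pixels are overwritten, so the tissue inside the
    detected region is left untouched.
    """
    edge = outline_mask(prob_map >= threshold)
    out = image_rgb.copy()
    out[edge] = (0, 255, 0)
    return out


# Toy example: a 3x3 "detected" block inside a 5x5 black image.
prob = np.zeros((5, 5), dtype=np.float32)
prob[1:4, 1:4] = 0.9
image = np.zeros((5, 5, 3), dtype=np.uint8)
result = draw_green_contour(image, prob)
```

Drawing only the outline, rather than filling the region, mirrors the stated goal of drawing attention to an area without obscuring the underlying cells.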

We believe that the ARM has potential for a large impact on global health, particularly for the diagnosis of infectious diseases, including tuberculosis and malaria, in developing countries. Furthermore, even in hospitals that will adopt a digital pathology workflow in the near future, ARM could be used in combination with the digital workflow where scanners still face major challenges or where rapid turnaround is required (e.g. cytology, fluorescent imaging, or intra-operative frozen sections). Of course, light microscopes have proven useful in many industries other than pathology, and we believe the ARM can be adapted for a broad range of applications across healthcare, life sciences research, and material science. We’re excited to continue to explore how the ARM can help accelerate the adoption of machine learning for positive impact around the world.