Recognizing Pose Similarity in Images and Videos

January 14, 2021

Posted by Jennifer J. Sun, Student Researcher and Ting Liu, Senior Software Engineer, Google Research

Everyday actions, such as jogging, reading a book, pouring water, or playing sports, can be viewed as a sequence of poses, consisting of the position and orientation of a person’s body. An understanding of poses from images and videos is a crucial step for enabling a range of applications, including augmented reality display, full-body gesture control, and physical exercise quantification. However, a 3-dimensional pose captured in two dimensions in images and videos appears different depending on the viewpoint of the camera. The ability to recognize similarity in 3D pose using only 2D information will help vision systems better understand the world.

In “View-Invariant Probabilistic Embedding for Human Pose” (Pr-VIPE), a spotlight paper at ECCV 2020, we present a new algorithm for human pose perception that recognizes similarity in human body poses across different camera views by mapping 2D body pose keypoints to a view-invariant embedding space. This ability enables tasks, such as pose retrieval, action recognition, action video synchronization, and more. Compared to existing models that directly map 2D pose keypoints to 3D pose keypoints, the Pr-VIPE embedding space is (1) view-invariant, (2) probabilistic in order to capture 2D input ambiguity, and (3) does not require camera parameters during training or inference. Trained with in-lab setting data, the model works on in-the-wild images out of the box, given a reasonably good 2D pose estimator (e.g., PersonLab, BlazePose, among others). The model is simple, results in compact embeddings, and can be trained (in ~1 day) using 15 CPUs. We have released the code on our GitHub repo.

|

| Pr-VIPE can be directly applied to align videos from different views. |

Pr-VIPE

The input to Pr-VIPE is a set of 2D keypoints, from any 2D pose estimator that produces a minimum of 13 body keypoints, and the output is the mean and variance of the pose embedding. The distances between embeddings of 2D poses correlate to their similarities in absolute 3D pose space. Our approach is based on two observations:

- The same 3D pose may appear very different in 2D as the viewpoint changes.

- The same 2D pose can be projected from different 3D poses.

The first observation motivates the need for view-invariance. To accomplish this, we define the matching probability, i.e., the likelihood that different 2D poses were projected from the same, or similar 3D poses. The matching probability predicted by Pr-VIPE for matching pose pairs should be higher than for non-matching pairs.

To address the second observation, Pr-VIPE utilizes a probabilistic embedding formulation. Because many 3D poses can project to the same or similar 2D poses, the model input exhibits an inherent ambiguity that is difficult to capture through deterministic mapping point-to-point in embedding space. Therefore, we map a 2D pose through a probabilistic mapping to an embedding distribution, of which we use the variance to represent the uncertainty of the input 2D pose. As an example, in the figure below the third 2D view of the 3D pose on the left is similar to the first 2D view of a different 3D pose on the right, so we map them into a similar location in the embedding space with large variances.

View-Invariance

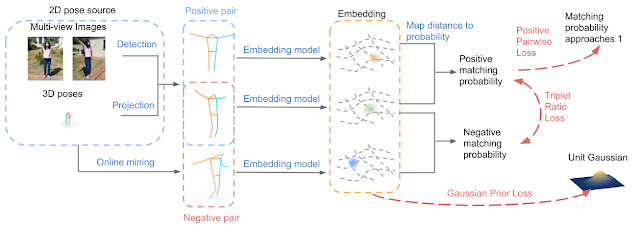

During training, we use 2D poses from two sources: multi-view images and projections of groundtruth 3D poses. Triplets of 2D poses (anchor, positive, and negative) are selected from a batch, where the anchor and positive are two different projections of the same 3D pose, and the negative is a projection of a non-matching 3D pose. Pr-VIPE then estimates the matching probability of 2D pose pairs from their embeddings.

During training, we push the matching probability of positive pairs to be close to 1 with a positive pairwise loss in which we minimize the embedding distance between positive pairs, and the matching probability of negative pairs to be small by maximizing the ratio of the matching probabilities between positive and negative pairs with a triplet ratio loss.

Probabilistic Embedding

Pr-VIPE maps a 2D pose to a probabilistic embedding as a multivariate Gaussian distribution using a sampling-based approach for similarity score computation between two distributions. During training, we use a Gaussian prior loss to regularize the predicted distribution.

Evaluation

We propose a new cross-view pose retrieval benchmark to evaluate the view-invariance property of the embedding. Given a monocular pose image, cross-view retrieval aims to retrieve the same pose from different views without using camera parameters. The results demonstrate that Pr-VIPE retrieves poses more accurately across views compared to baseline methods in both evaluated datasets (Human3.6M, MPI-INF-3DHP).

|

| Pr-VIPE retrieves poses across different views more accurately relative to the baseline method (3D pose estimation). |

Common 3D pose estimation methods (such as the simple baseline used for comparison above, SemGCN, and EpipolarPose, amongst many others), predict 3D poses in camera coordinates, which are not directly view-invariant. Thus, rigid alignment between every query-index pair is required for retrieval using estimated 3D poses, which is computationally expensive due to the need for singular value decomposition (SVD). In contrast, Pr-VIPE embeddings can be directly used for distance computation in Euclidean space, without any post-processing.

Applications

View-invariant pose embedding can be applied to many image and video related tasks. Below, we show Pr-VIPE applied to cross-view retrieval on in-the-wild images without using camera parameters.

The same Pr-VIPE model can also be used for video alignment. To do so, we stack Pr-VIPE embeddings within a small time window, and use the dynamic time warping (DTW) algorithm to align video pairs.

|

| Manual video alignment is difficult and time-consuming. Here, Pr-VIPE is applied to automatically align videos of the same action repeated from different views. |

The video alignment distance calculated via DTW can then be used for action recognition by classifying videos using nearest neighbor search. We evaluate the Pr-VIPE embedding using the Penn Action dataset and demonstrate that using the Pr-VIPE embedding without fine-tuning on the target dataset, yields highly competitive recognition accuracy. In addition, we show that Pr-VIPE even achieves relatively accurate results using only videos from a single view in the index set.

|

| Pr-VIPE recognizes action across views using pose inputs only, and is comparable to or better than methods using pose only or with additional context information (such as Iqbal et al., Liu and Yuan, Luvizon et al., and Du et al.). When action labels are only available for videos from a single view, Pr-VIPE (1-view only) can still achieve relatively accurate results. |

Conclusion

We introduce the Pr-VIPE model for mapping 2D human poses to a view-invariant probabilistic embedding space, and show that the learned embeddings can be directly used for pose retrieval, action recognition, and video alignment. Our cross-view retrieval benchmark can be used to test the view-invariant property of other embeddings. We look forward to hearing about what you can do with pose embeddings!

Acknowledgments

Special thanks to Jiaping Zhao, Liang-Chieh Chen, Long Zhao (Rutgers University), Liangzhe Yuan, Yuxiao Wang, Florian Schroff, Hartwig Adam, and the Mobile Vision team for the wonderful collaboration and support.